Solve it by hand

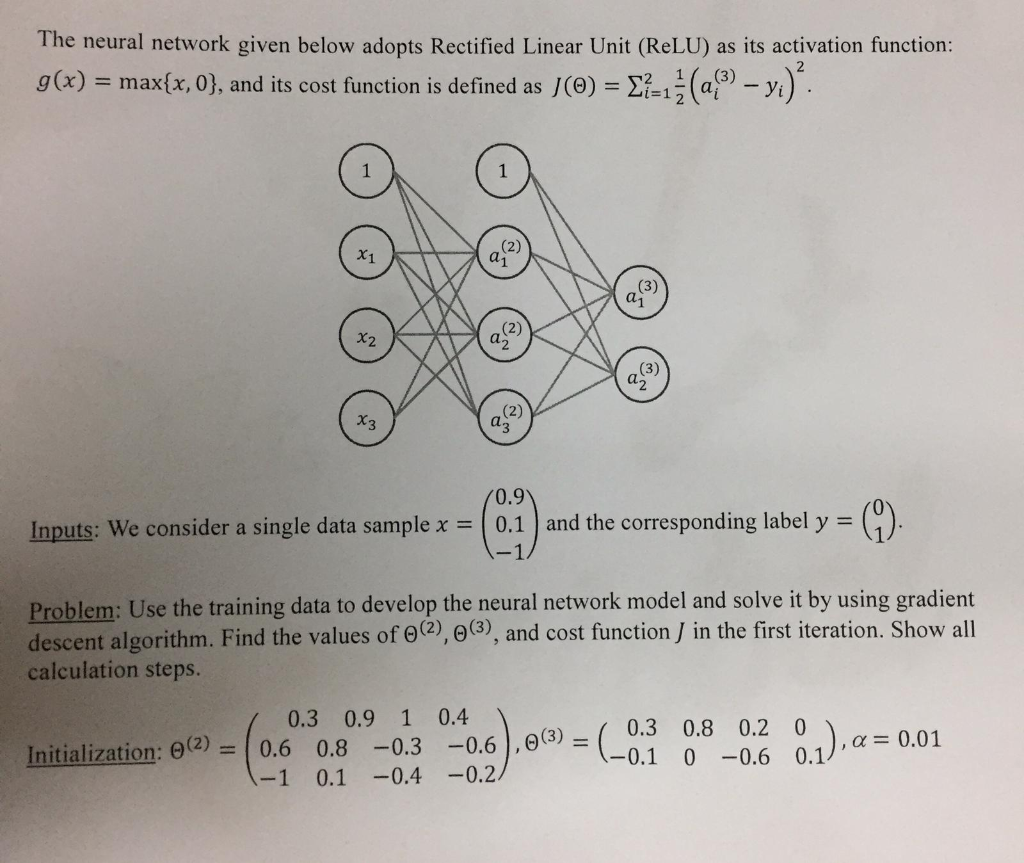

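The neural network shown in the figure uses the Rectified Linear Unit (ReLU) as its activation function, $g(x) = \max(x, 0)$. Its cost function $J(\Theta)$ is defined as a sum over $i$ of a term in the difference between the network output and the label $y_i$ (see the figure for the exact expression). The figure labels the inputs $x_1$ and $x_2$ and shows the value 0.9.

Inputs: We consider a single data sample and the corresponding label $y$.

Problem: Use the training data to develop the neural network model and solve it using the gradient descent algorithm. Find the values of $\Theta^{(2)}$, $\Theta^{(3)}$, and the cost function $J$ in the first iteration. Show all calculation steps.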
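To check a hand calculation, one gradient descent iteration can be sketched in code. This is only an illustration under stated assumptions, not the solution to the problem above. The 2-2-1 layout, the absence of bias terms, the squared-error cost $\frac{1}{2}(a - y)^2$, the learning rate, and every numeric value are hypothetical, since the actual ones are given only in the figure. The names `theta2` (input to hidden) and `theta3` (hidden to output) are an assumed reading of $\Theta^{(2)}$ and $\Theta^{(3)}$.

```python
def relu(z):
    """ReLU activation: g(z) = max(z, 0)."""
    return max(z, 0.0)


def relu_grad(z):
    """Derivative of ReLU; taken as 0 at z = 0 by convention."""
    return 1.0 if z > 0 else 0.0


def gradient_step(x, y, theta2, theta3, lr):
    """One gradient descent iteration on a single sample.

    Assumed (hypothetical) setup: no bias terms, ReLU on the hidden and
    output units, cost J = 0.5 * (output - y) ** 2.
    Returns the updated weights and the cost before the update.
    """
    # Forward pass
    z2 = [sum(w * xi for w, xi in zip(row, x)) for row in theta2]
    a2 = [relu(z) for z in z2]
    z3 = sum(w * a for w, a in zip(theta3, a2))
    a3 = relu(z3)
    cost = 0.5 * (a3 - y) ** 2

    # Backward pass (chain rule)
    delta3 = (a3 - y) * relu_grad(z3)
    grad3 = [delta3 * a for a in a2]
    delta2 = [delta3 * w * relu_grad(z) for w, z in zip(theta3, z2)]
    grad2 = [[d * xi for xi in x] for d in delta2]

    # Gradient descent update
    new_theta3 = [w - lr * g for w, g in zip(theta3, grad3)]
    new_theta2 = [[w - lr * g for w, g in zip(row, g_row)]
                  for row, g_row in zip(theta2, grad2)]
    return new_theta2, new_theta3, cost


# Hypothetical values, chosen only so the arithmetic is easy to follow by hand.
x = [1.0, 2.0]
y = 2.0
theta2 = [[0.5, 0.25], [-1.0, 0.25]]
theta3 = [1.0, 0.5]

new_theta2, new_theta3, cost = gradient_step(x, y, theta2, theta3, lr=0.5)
print(cost)        # 0.5
print(new_theta3)  # [1.5, 0.5]
print(new_theta2)  # [[1.0, 1.25], [-1.0, 0.25]]
```

In this made-up example the second hidden unit has a negative pre-activation, so ReLU outputs 0 and its weights receive no update in this iteration. The same effect is worth watching for when working through the real values by hand.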
